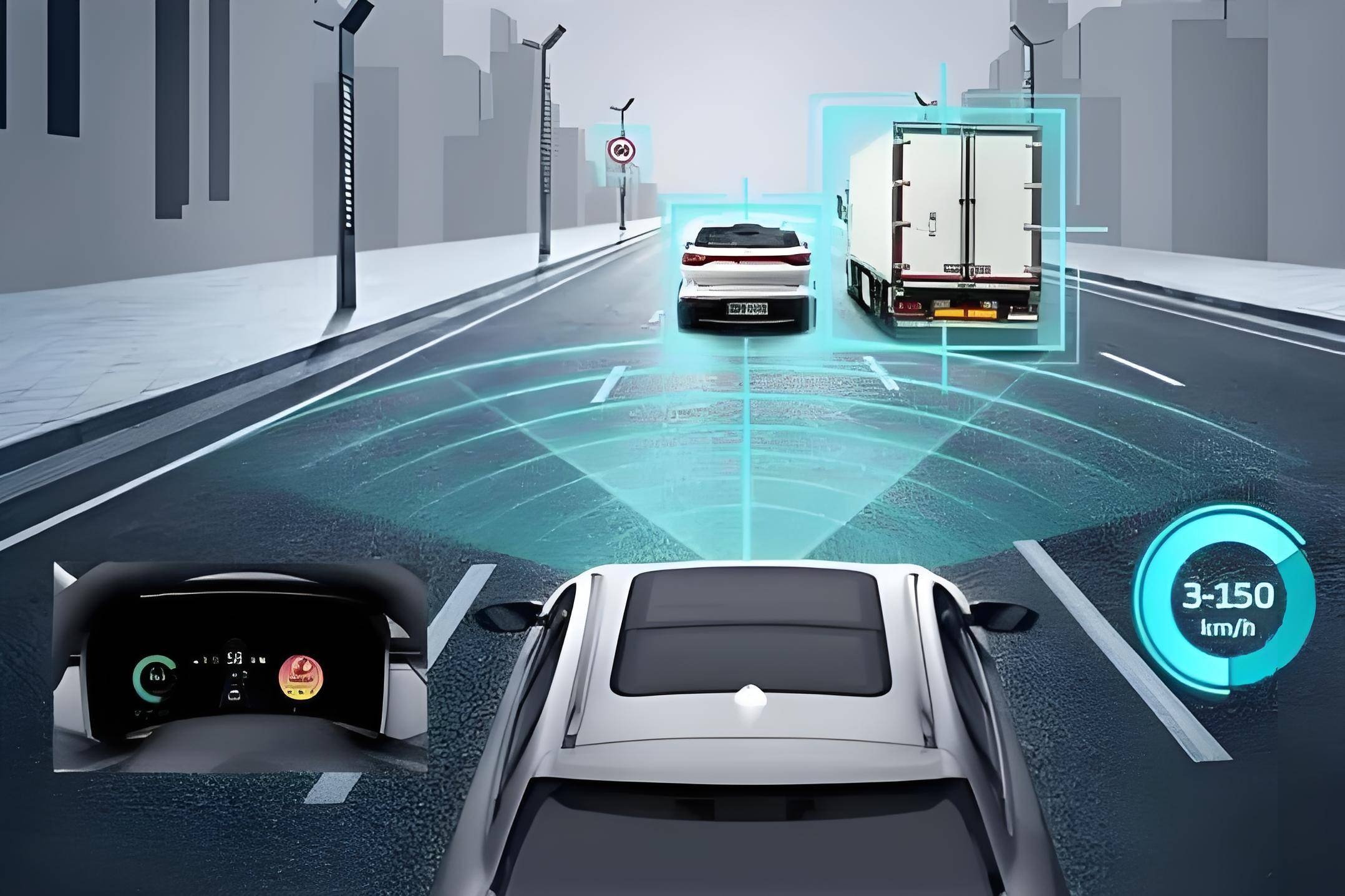

Viewed at the architectural level, the easiest way to understand autonomous driving is as three stages: perception, decision-making, and execution.

Perception answers the question "what is around me?", much like human eyes and ears. It gathers information about surrounding obstacles and the road through cameras, radars, maps, and so on.

Decision-making answers the question "what should I do?", much like the brain. By analyzing the perceived information, it generates a path and a vehicle speed.

Execution is like the hands and feet. It converts the decision into brake, accelerator, and steering signals so that the vehicle drives as expected.
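The three stages can be sketched as three functions chained together. This is only a minimal illustration: the names `perceive`, `decide`, `execute`, and `drive_step`, the linear slow-down rule, and all numeric values are assumptions made for the example and do not come from any particular autonomous driving stack.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    distance_m: float  # distance ahead of the vehicle along the lane


@dataclass
class Command:
    throttle: float  # 0.0 to 1.0
    brake: float     # 0.0 to 1.0


def perceive(raw_ranges_m):
    """Perception: turn raw range readings into a list of obstacles."""
    return [Obstacle(distance_m=r) for r in raw_ranges_m if r > 0.0]


def decide(obstacles, cruise_speed_mps=15.0, safe_gap_m=30.0):
    """Decision-making: pick a target speed from what was perceived."""
    if not obstacles:
        return cruise_speed_mps
    nearest = min(o.distance_m for o in obstacles)
    # Slow down linearly once the nearest obstacle is inside the safe gap.
    return cruise_speed_mps * min(1.0, nearest / safe_gap_m)


def execute(target_speed_mps, current_speed_mps, gain=0.1):
    """Execution: convert the target speed into a throttle or brake command."""
    error = target_speed_mps - current_speed_mps
    effort = max(-1.0, min(1.0, gain * error))
    if effort >= 0.0:
        return Command(throttle=effort, brake=0.0)
    return Command(throttle=0.0, brake=-effort)


def drive_step(raw_ranges_m, current_speed_mps):
    """Run one perception, decision, execution cycle."""
    obstacles = perceive(raw_ranges_m)
    target = decide(obstacles)
    return execute(target, current_speed_mps)
```

With an empty road, `drive_step([], 10.0)` produces a throttle command. With an obstacle 6 m ahead at 15 m/s, `drive_step([6.0], 15.0)` produces full braking.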

Going one level deeper, things get a little more complicated. In daily life we intuitively believe that we always decide based on what we see at that very moment, but this is often not the case. There is always a delay from the eyes to the brain to the hands and feet, and the same is true for autonomous driving. We never notice the effect because the brain automatically handles "prediction". Even if the delay is only a few milliseconds, our decisions guide our hands and feet based on a prediction of what we see, and this is the basis for functioning normally. Therefore, in autonomous driving we add a prediction module before decision-making.

The perception process also has more to it than first appears. On closer inspection it divides into two stages: "sensing" and "perception". "Sensing" obtains the raw sensor data, such as an image, while "perception" is the useful information extracted from that image (such as how many people are in it). As the old saying goes, "what you see is real, what you hear may be false." In the same spirit, the useful information from "perception" can be further divided into ego-vehicle perception and external perception. People and autonomous vehicles alike often use different strategies when processing these two categories of information.

Ego-vehicle perception - information obtained at every moment by the vehicle's own sensors (including cameras, radars, GPS, etc.)

External perception - information that is collected and processed by external agents or drawn from past memory and then passed on to the vehicle (including positioning, maps, connected-vehicle information, etc.). Its prerequisite is an ego-vehicle positioning input (GPS).
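One way to see why external perception needs the ego-vehicle position: a point from a map or a connected-vehicle message is only useful once it is expressed relative to the vehicle. The sketch below assumes a flat 2D map frame, a heading measured counter-clockwise from the map's x axis, and an ego frame with x forward and y to the left. The function name `world_to_ego` is hypothetical.

```python
import math


def world_to_ego(point_xy, ego_xy, ego_heading_rad):
    """Express a point given in an absolute (map) frame in the ego-vehicle frame.

    Assumed ego frame convention: x points forward, y points left.
    """
    dx = point_xy[0] - ego_xy[0]
    dy = point_xy[1] - ego_xy[1]
    cos_h = math.cos(ego_heading_rad)
    sin_h = math.sin(ego_heading_rad)
    # Rotate the offset by -heading so the vehicle's forward axis becomes x.
    x_forward = cos_h * dx + sin_h * dy
    y_left = -sin_h * dx + cos_h * dy
    return x_forward, y_left
```

For example, with the vehicle at (100, 200) heading along the map's +y axis, a map point at (100, 250) comes out 50 m straight ahead. Without the ego position and heading, the same map point says nothing about where the object is relative to the vehicle.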

In addition, after algorithmic processing, different types of sensors often contradict one another about obstacles, lanes, and so on. The radar may see an obstacle ahead while the camera reports none. At this point a "fusion" module is needed to further associate and adjudicate the inconsistent information.

We often group "fusion and prediction" together as the "world model". The term is a vivid one, whether you are a materialist or an idealist. The "world" itself cannot be stuffed into your mind; what guides our work and life is a "model" of the "world", that is, the understanding of the world that we gradually build in our minds after birth by processing what we see. Taoism calls this the "inner scene". The core responsibility of the world model is to understand the attributes and relationships of the current environmental elements through "fusion", and to make "predictions" with the help of prior rules, giving decision-making and execution more room for judgment. The time span involved can range from milliseconds to several hours.

With the world model added, the architecture is more complete, but one detail is often overlooked: the flow of information. In the simple view, information flows one way: it is sensed by the eyes, processed by the brain, and finally handed to the hands and feet for execution. The actual situation is often more complicated. Two typical behaviors produce information flows in exactly the opposite direction: "planning to achieve a goal" and "shifting attention".

How should we understand "planning to achieve a goal"? In fact, thinking does not start from perception but from a "goal". Only with a goal can a meaningful "perception-decision-execution" process be triggered.
For example, when you want to drive to a destination, you may know several routes, and you end up weighing the congestion on each and choosing one. Congestion belongs to the world model, and "reaching the destination" belongs to the decision. This is a process in which the decision is passed to the world model.

How should we understand "shifting attention"? Neither a human nor a machine can extract all the information hidden in even a single image. Depending on the need and the context, we tend to focus on a limited region and a limited set of categories. This focus cannot be obtained from the image itself; it comes from the "world model" and the "goal", so it is a process that passes from decision-making to the world model and then to perception.

After adding this necessary information and reorganizing the whole architecture, it becomes the following. More complicated, isn't it? And we are not finished yet.

Autonomous driving algorithms, like the brain, have processing time requirements. A typical cycle is between 10 ms and 100 ms, which is enough to respond to changes in the environment. But the environment is sometimes simple and sometimes very complex, and many algorithm modules cannot meet this time requirement. Pondering the meaning of life, for example, is probably not something that can be done in 100 ms, and if you had to ponder it with every step you took, it would wear out the brain. The same is true for computers, which have physical limits on computing power and speed. The solution is to introduce a hierarchical framework. In this layering mechanism, the processing cycle generally changes by a factor of 3 to 10 from one layer to the next. Of course, the layers do not need to appear intact in a real framework. In engineering practice they can be adjusted flexibly according to the on-board resources and the algorithms in use.
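The hierarchical idea can be illustrated with a small multi-rate schedule. This is a sketch under stated assumptions: the base cycle of 10 ms is taken from the low end of the range above, the ratios 5 and 10 are arbitrary picks from the 3 to 10 range, and the sketch assumes that the upper layers are the ones running on the longer cycles. The function name `build_schedule` is hypothetical.

```python
def build_schedule(base_period_ms, ratios, horizon_ms):
    """Return the layer periods and which layers are due at each base tick.

    ratios[i] is how many times longer the cycle of layer i+1 is than the
    cycle of layer i. Layer 0 is the fastest layer (an assumption of this sketch).
    """
    periods = [base_period_ms]
    for ratio in ratios:
        periods.append(periods[-1] * ratio)

    schedule = []
    for t in range(0, horizon_ms, base_period_ms):
        # A layer runs on every tick that is a multiple of its own period.
        due = [layer for layer, period in enumerate(periods) if t % period == 0]
        schedule.append((t, due))
    return periods, schedule


periods, schedule = build_schedule(base_period_ms=10, ratios=[5, 10], horizon_ms=100)
```

Here the three layers run every 10 ms, 50 ms, and 500 ms. Within the first 100 ms, all three layers run at t = 0, the two faster layers run again at t = 50, and only the fastest layer runs on the other ticks, so the slow, expensive reasoning never blocks the fast loop.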
Basically, perception is an upward process: guided by attention, it keeps refining specific elements and provides "deep and directed" perceptual information. Decision-making is a downward process: using the world model at each level, it decomposes the goal layer by layer into actions for each execution unit. The world model generally has no specific flow direction and is used to build environmental information at different levels of granularity.

Depending on the complexity of the task, the division of labor among the people involved, and the communication environment, the layers are also trimmed and merged as appropriate. For example, a low-level ADAS function (ACC) has little computing power available and may be designed with only one layer. Advanced ADAS functions (AutoPilot) generally use a two-layer configuration. Full autonomous driving involves many complex algorithms, and a three-layer design is sometimes necessary. In software architecture design, the world model of a given layer is also sometimes merged with the perception or decision module of the same layer.

Autonomous driving companies and industry standards all publish their own software architecture designs, but these are usually trimmed to fit the current situation and are not universal. Still, to make things easier to follow, I will map the current mainstream functional modules onto the framework and look at how they correspond, which helps in understanding the principles. One note in advance: although this looks somewhat like a software architecture, it is still a description of principles. Actual software architecture design is more complicated than this. The details are not covered here, but the parts that are easy to confuse are expanded on. Let's go through them one by one.

Environment perception - all in on deep learning

To make sure the unmanned vehicle understands and grasps its environment, the environment perception part of the system usually needs to obtain a large amount of information about the surroundings, including the position and speed of obstacles, the precise shape of the lane ahead, and the position and type of signs. This information is usually obtained by fusing data from multiple sensors such as lidar, cameras, and millimeter-wave radar. The development of deep learning has made it an industry-wide consensus that autonomous driving will be built with neural network algorithms. The perception algorithms were the vanguard of this shift to deep learning and were the first software module to complete the transition.

Positioning, maps and V2X - the relationship and difference between ego-vehicle perception and external perception

In the traditional understanding, external perception is based on GPS positioning signals and uses information expressed in an absolute coordinate system, such as high-precision maps and vehicle-to-everything (V2X) messages.
That information is converted into the ego-vehicle coordinate system, where it becomes a perception source the vehicle can use, much like the Gaode navigation app that people use. Together with the "ego-vehicle perception" information, which is already in the ego-vehicle coordinate system, it provides the environmental information for autonomous driving.

The actual design is often more complex, however. Because GPS is unreliable and the IMU needs continuous correction, mass-produced autonomous driving positioning often matches perception results against the map to obtain an accurate absolute position, and uses perception results to correct the IMU to obtain an accurate relative position. This forms redundancy with the INS composed of GPS and IMU. As a result, the positioning signal that "external perception" depends on often relies in turn on "ego-vehicle perception" information.

In addition, although maps are strictly speaking part of the "world model", GPS data is sensitive, so in domestic software implementations the positioning module and map module are integrated and all GPS data is obfuscated, to ensure that no sensitive location information is leaked.

Fusion and prediction module - focus on the difference between the two

Fusion has two core problems to solve. The first is time and space synchronization: using coordinate transformation algorithms together with software and hardware time synchronization, the measurements from lidar, cameras, and millimeter-wave radar are first aligned to a common point in space and time, so that the raw perception data for the whole environment is consistent. The second is association and outlier rejection: fusion associates detections from different sensors that map to the same "world model" element (one person, one lane, etc.) and removes outliers that may be caused by a false detection from a single sensor.
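The second fusion problem, associating detections and removing single-sensor outliers, can be sketched with a simple distance gate. This is a deliberately naive illustration: it assumes the detections are already aligned in time and expressed in one coordinate frame, and the gate of 2 m, the two-sensor rule, and the function name `fuse` are all assumptions for the example. Production systems typically use tracking filters and more careful association logic.

```python
import math


def fuse(detections, gate_m=2.0, min_sensors=2):
    """Group nearby detections and keep only groups confirmed by several sensors.

    detections: list of (sensor_name, x_m, y_m) tuples, already time-aligned
    and expressed in the same coordinate frame.
    """
    clusters = []  # each cluster is a list of detections of the same element
    for det in detections:
        _, x, y = det
        for cluster in clusters:
            cx = sum(d[1] for d in cluster) / len(cluster)
            cy = sum(d[2] for d in cluster) / len(cluster)
            if math.hypot(x - cx, y - cy) <= gate_m:
                cluster.append(det)  # close enough: same world-model element
                break
        else:
            clusters.append([det])  # no match: start a new element

    fused = []
    for cluster in clusters:
        sensors = {d[0] for d in cluster}
        if len(sensors) < min_sensors:
            continue  # seen by one sensor only: treated as a likely false detection
        fused.append({
            "x_m": sum(d[1] for d in cluster) / len(cluster),
            "y_m": sum(d[2] for d in cluster) / len(cluster),
            "sensors": sorted(sensors),
        })
    return fused


objects = fuse([
    ("lidar", 20.0, 0.0),
    ("camera", 20.6, 0.2),
    ("radar", 19.7, -0.2),
    ("radar", 45.0, 3.0),  # reported by radar only
])
```

In this example the three detections near 20 m are merged into one object, while the radar-only detection at 45 m is dropped.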
What distinguishes fusion from prediction is that fusion only processes information from past and current moments and does not deal with future moments. Prediction makes judgments about future moments based on the fusion results, over horizons ranging from 10 ms to 5 minutes. This includes predicting traffic signals, the driving paths of surrounding obstacles, or the position of a distant curve. Predictions over different horizons are supplied to planning at the corresponding cycle, with different granularities, giving planning more room for adjustment.

Planning and control - hierarchical strategy decomposition

Planning is the process by which the unmanned vehicle makes purposeful decisions toward a goal. For an unmanned vehicle, the goal usually means getting from the starting point to the destination while avoiding obstacles and continuously optimizing the driving trajectory and behavior to ensure the safety and comfort of the passengers. Structurally, planning integrates information of different granularities, evaluates and decomposes the external goal layer by layer, and finally passes the result to the actuators, forming a complete decision.

In more detail, the planning module is generally divided into three layers: mission planning, behavioral planning, and motion planning. The core of mission planning is to obtain a global path from the road network using a discrete path search algorithm and to assign a large-scale task type; its cycle is often long. Behavioral planning uses a finite state machine to decide, on a medium cycle, the specific behavior the vehicle should take (left lane change, going around an obstacle, E-STOP) and sets some boundary parameters and an approximate path range. The motion planning layer, using sampling-based or optimization-based methods, finally produces a single path that meets the comfort and safety requirements.
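The finite state machine used in behavioral planning can be sketched as a single transition function. The three states mirror behaviors named above, but the transition rules, the thresholds of 5 m and 30 m, and the names `Behavior` and `next_behavior` are assumptions chosen only to make the example concrete.

```python
from enum import Enum


class Behavior(Enum):
    LANE_KEEP = "lane_keep"
    CHANGE_LEFT = "left_lane_change"
    E_STOP = "e_stop"


def next_behavior(current, gap_ahead_m, left_lane_free, lane_change_done=False,
                  stop_gap_m=5.0, follow_gap_m=30.0):
    """One transition of a small finite state machine for behavioral planning."""
    # An emergency stop overrides every other behavior.
    if gap_ahead_m < stop_gap_m:
        return Behavior.E_STOP
    if current is Behavior.E_STOP:
        # Leave the stop state only once the road ahead is clear again.
        if gap_ahead_m >= follow_gap_m:
            return Behavior.LANE_KEEP
        return Behavior.E_STOP
    if current is Behavior.CHANGE_LEFT:
        # Stay in the lane change until it is reported as finished.
        return Behavior.LANE_KEEP if lane_change_done else Behavior.CHANGE_LEFT
    # current is LANE_KEEP: change lanes when blocked and the left lane is free.
    if gap_ahead_m < follow_gap_m and left_lane_free:
        return Behavior.CHANGE_LEFT
    return Behavior.LANE_KEEP
```

The function only selects a behavior. Turning that behavior into boundary parameters and a concrete path remains the job of the rest of the behavioral layer and the motion planning layer.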
Finally, the control module follows this single path using feedforward prediction and feedback control algorithms, and operates the brake, steering, accelerator, body, and other actuators to carry out the commands.

I don't know how far you have followed, but the above is only an introduction to the principles of autonomous driving. The theory, algorithms, and architecture of autonomous driving are developing very fast. The content above is fairly fundamental knowledge and will not become outdated for a long time, but new demands have also brought many new insights into the architecture and principles of autonomous driving.